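DataX is Alibaba's open-source offline synchronization tool for heterogeneous data sources. It aims to provide stable, efficient data synchronization between many kinds of sources, including relational databases (MySQL, Oracle, etc.), HDFS, Hive, ODPS, HBase, and FTP. For background, see "Introduction to DataX 3.0, Alibaba Cloud's Open-Source Offline Synchronization Tool".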

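In 2015, one of our projects used DataX 1.0 to build a data extraction feature. It was easy to use, and we extended it with our own plugins, such as DB2Writer, DB2Reader, GBaseWriter, and GBaseReader. DataX's plugin mechanism really is powerful and flexible.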

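Later, in 2018, we needed scheduled cross-database synchronization. The data volumes were small at the time (tens of thousands of rows, at most a few hundred thousand), so we chose Kettle. It is quick to learn and GUI-driven: we designed our ktr (transformation) and kjb (job) files in the GUI, then ran the kjb from the command line on a schedule to implement periodic ETL processing and synchronization.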

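But Kettle could no longer keep up: we now had to sync hundreds of millions of rows of metric data from DB2 to GBase. The better approach would normally be to export files from DB2 and load them into GBase, but we lacked the permissions, and with only one host available we could not set that up. So it was time to bring out our trump card again: DataX.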

Building DataX from source

Tools:

- Maven

- Git

- IntelliJ IDEA

- JDK 1.8

Of course, you can skip Git and IDEA entirely: just download the source and run the Maven command directly.
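Before building, it can help to confirm the command-line tools are on PATH. The following is a minimal sketch; the function name `check_build_tools` is hypothetical. It only checks that `mvn` and `java` can be found (Git is treated as optional), it does not verify that the JDK is version 1.8, and it skips IDEA because that is a GUI tool.

```python
import shutil


def check_build_tools(required=("mvn", "java"), optional=("git",)):
    """Report which build tools are on PATH (hypothetical helper).

    Returns (missing_required, found), where found maps each tool name to
    its resolved path, or None if it is not on PATH. Versions are not checked.
    """
    found = {name: shutil.which(name) for name in tuple(required) + tuple(optional)}
    missing = [name for name in required if found[name] is None]
    return missing, found


if __name__ == "__main__":
    missing, found = check_build_tools()
    for name, path in found.items():
        print(f"{name:5} -> {path or 'not found'}")
    if missing:
        print("missing required tools:", ", ".join(missing))
```

Run `java -version` separately to confirm the JDK version, since presence on PATH says nothing about it.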

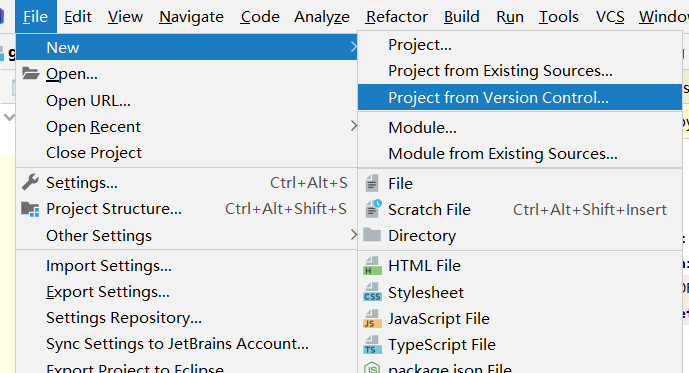

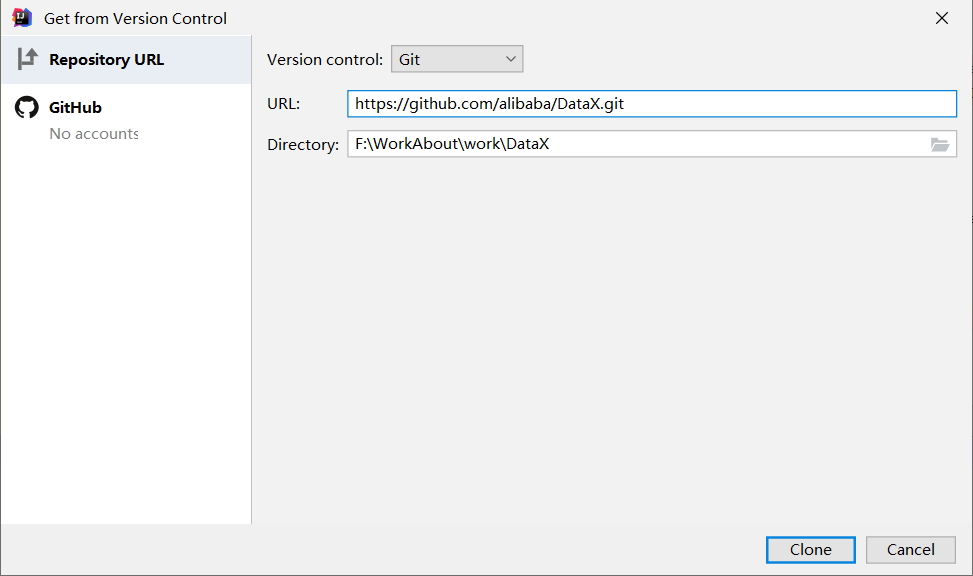

Importing DataX into IDEA:

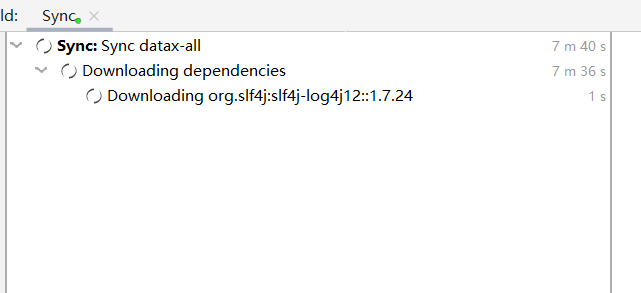

After you click Clone, IDEA downloads the code and then fetches the dependencies.

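Once the dependencies are installed, run the packaging command below. When packaging succeeds, a target folder is generated in the project directory. My packaged output came to 1.12 GB, because it contains many plugins plus the dependencies each plugin needs, so I kept only the mysql and rdbms plugins.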

```
mvn -U clean package assembly:assembly -Dmaven.test.skip=true
[INFO] Reading assembly descriptor: package.xml
[INFO] datax/lib\commons-io-2.4.jar already added, skipping
[INFO] datax/lib\commons-lang3-3.3.2.jar already added, skipping
[INFO] datax/lib\commons-math3-3.1.1.jar already added, skipping
[INFO] datax/lib\datax-common-0.0.1-SNAPSHOT.jar already added, skipping
[INFO] datax/lib\datax-transformer-0.0.1-SNAPSHOT.jar already added, skipping
[INFO] datax/lib\fastjson-1.1.46.sec01.jar already added, skipping
[INFO] datax/lib\hamcrest-core-1.3.jar already added, skipping
[INFO] datax/lib\logback-classic-1.0.13.jar already added, skipping
[INFO] datax/lib\logback-core-1.0.13.jar already added, skipping
[INFO] datax/lib\slf4j-api-1.7.10.jar already added, skipping
[INFO] Building tar : F:\WorkAbout\work\DataX\target\datax.tar.gz
[INFO] datax/lib\commons-io-2.4.jar already added, skipping
[INFO] datax/lib\commons-lang3-3.3.2.jar already added, skipping
[INFO] datax/lib\commons-math3-3.1.1.jar already added, skipping
[INFO] datax/lib\datax-common-0.0.1-SNAPSHOT.jar already added, skipping
[INFO] datax/lib\datax-transformer-0.0.1-SNAPSHOT.jar already added, skipping
[INFO] datax/lib\fastjson-1.1.46.sec01.jar already added, skipping
[INFO] datax/lib\hamcrest-core-1.3.jar already added, skipping
[INFO] datax/lib\logback-classic-1.0.13.jar already added, skipping
[INFO] datax/lib\logback-core-1.0.13.jar already added, skipping
[INFO] datax/lib\slf4j-api-1.7.10.jar already added, skipping
[INFO] datax/lib\commons-io-2.4.jar already added, skipping
[INFO] datax/lib\commons-lang3-3.3.2.jar already added, skipping
[INFO] datax/lib\commons-math3-3.1.1.jar already added, skipping
[INFO] datax/lib\datax-common-0.0.1-SNAPSHOT.jar already added, skipping
[INFO] datax/lib\datax-transformer-0.0.1-SNAPSHOT.jar already added, skipping
[INFO] datax/lib\fastjson-1.1.46.sec01.jar already added, skipping
[INFO] datax/lib\hamcrest-core-1.3.jar already added, skipping
[INFO] datax/lib\logback-classic-1.0.13.jar already added, skipping
[INFO] datax/lib\logback-core-1.0.13.jar already added, skipping
[INFO] datax/lib\slf4j-api-1.7.10.jar already added, skipping
[INFO] Copying files to F:\WorkAbout\work\DataX\target\datax
[INFO] datax/lib\commons-io-2.4.jar already added, skipping
[INFO] datax/lib\commons-lang3-3.3.2.jar already added, skipping
[INFO] datax/lib\commons-math3-3.1.1.jar already added, skipping
[INFO] datax/lib\datax-common-0.0.1-SNAPSHOT.jar already added, skipping
[INFO] datax/lib\datax-transformer-0.0.1-SNAPSHOT.jar already added, skipping
[INFO] datax/lib\fastjson-1.1.46.sec01.jar already added, skipping
[INFO] datax/lib\hamcrest-core-1.3.jar already added, skipping
[INFO] datax/lib\logback-classic-1.0.13.jar already added, skipping
[INFO] datax/lib\logback-core-1.0.13.jar already added, skipping
[INFO] datax/lib\slf4j-api-1.7.10.jar already added, skipping
[WARNING] Assembly file: F:\WorkAbout\work\DataX\target\datax is not a regular file (it may be a directory). It cannot be attached to the project build for installation or deployment.
[INFO] ------------------------------------------------------------------------
[INFO] Reactor Summary:
[INFO]
[INFO] datax-all 0.0.1-SNAPSHOT ........................... SUCCESS [14:23 min]
[INFO] datax-common ....................................... SUCCESS [ 3.743 s]
[INFO] datax-transformer .................................. SUCCESS [ 4.746 s]
[INFO] datax-core ......................................... SUCCESS [ 35.608 s]
[INFO] plugin-rdbms-util .................................. SUCCESS [ 2.233 s]
[INFO] mysqlreader ........................................ SUCCESS [ 3.712 s]
[INFO] drdsreader ......................................... SUCCESS [ 2.849 s]
[INFO] sqlserverreader .................................... SUCCESS [ 2.668 s]
[INFO] postgresqlreader ................................... SUCCESS [ 3.018 s]
[INFO] oraclereader ....................................... SUCCESS [ 2.720 s]
[INFO] odpsreader ......................................... SUCCESS [ 4.960 s]
[INFO] otsreader .......................................... SUCCESS [ 5.210 s]
[INFO] otsstreamreader .................................... SUCCESS [ 4.729 s]
[INFO] plugin-unstructured-storage-util ................... SUCCESS [ 1.542 s]
[INFO] txtfilereader ...................................... SUCCESS [ 11.342 s]
[INFO] hdfsreader ......................................... SUCCESS [ 36.736 s]
[INFO] streamreader ....................................... SUCCESS [ 2.925 s]
[INFO] ossreader .......................................... SUCCESS [ 12.321 s]
[INFO] ftpreader .......................................... SUCCESS [ 12.288 s]
[INFO] mongodbreader ...................................... SUCCESS [ 11.568 s]
[INFO] rdbmsreader ........................................ SUCCESS [ 3.784 s]
[INFO] hbase11xreader ..................................... SUCCESS [ 15.342 s]
[INFO] hbase094xreader .................................... SUCCESS [ 13.149 s]
[INFO] tsdbreader ......................................... SUCCESS [ 3.722 s]
[INFO] opentsdbreader ..................................... SUCCESS [ 6.592 s]
[INFO] cassandrareader .................................... SUCCESS [ 11.834 s]
[INFO] mysqlwriter ........................................ SUCCESS [ 3.282 s]
[INFO] drdswriter ......................................... SUCCESS [ 9.150 s]
[INFO] odpswriter ......................................... SUCCESS [ 13.498 s]
[INFO] txtfilewriter ...................................... SUCCESS [ 21.791 s]
[INFO] ftpwriter .......................................... SUCCESS [ 19.378 s]
[INFO] hdfswriter ......................................... SUCCESS [ 32.956 s]
[INFO] streamwriter ....................................... SUCCESS [ 5.611 s]
[INFO] otswriter .......................................... SUCCESS [ 5.545 s]
[INFO] oraclewriter ....................................... SUCCESS [ 4.152 s]
[INFO] sqlserverwriter .................................... SUCCESS [ 2.689 s]
[INFO] postgresqlwriter ................................... SUCCESS [ 2.502 s]
[INFO] osswriter .......................................... SUCCESS [ 12.467 s]
[INFO] mongodbwriter ...................................... SUCCESS [ 12.070 s]
[INFO] adswriter .......................................... SUCCESS [ 9.578 s]
[INFO] ocswriter .......................................... SUCCESS [ 5.485 s]
[INFO] rdbmswriter ........................................ SUCCESS [ 2.927 s]
[INFO] hbase11xwriter ..................................... SUCCESS [ 15.926 s]
[INFO] hbase094xwriter .................................... SUCCESS [ 15.623 s]
[INFO] hbase11xsqlwriter .................................. SUCCESS [ 27.912 s]
[INFO] hbase11xsqlreader .................................. SUCCESS [ 35.410 s]
[INFO] elasticsearchwriter ................................ SUCCESS [ 7.341 s]
[INFO] tsdbwriter ......................................... SUCCESS [ 3.051 s]
[INFO] adbpgwriter ........................................ SUCCESS [ 5.857 s]
[INFO] gdbwriter .......................................... SUCCESS [ 11.935 s]
[INFO] cassandrawriter .................................... SUCCESS [ 6.054 s]
[INFO] hbase20xsqlreader .................................. SUCCESS [ 3.264 s]
[INFO] hbase20xsqlwriter 0.0.1-SNAPSHOT ................... SUCCESS [ 3.577 s]
[INFO] ------------------------------------------------------------------------
[INFO] BUILD SUCCESS
[INFO] ------------------------------------------------------------------------
[INFO] Total time: 23:03 min
[INFO] Finished at: 2019-12-18T11:59:17+08:00
[INFO] ------------------------------------------------------------------------
```
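The post does not say how the other plugins were removed. One option, sketched below, is a small script that deletes every plugin directory whose name contains none of the keywords you want to keep. It assumes the packaged tree has the layout `datax/plugin/reader/<name>` and `datax/plugin/writer/<name>`, so check your own build output first. The helper `prune_plugins` is hypothetical and defaults to a dry run that only reports what it would delete.

```python
import shutil
from pathlib import Path


def prune_plugins(datax_home, keep_keywords, dry_run=True):
    """Remove plugin directories whose names contain none of keep_keywords.

    Assumes the layout <datax_home>/plugin/{reader,writer}/<plugin-name>.
    Returns the sorted names of plugin directories that were removed, or
    that would be removed when dry_run is True.
    """
    removed = []
    for kind in ("reader", "writer"):
        plugin_dir = Path(datax_home) / "plugin" / kind
        if not plugin_dir.is_dir():
            continue  # layout differs from the assumption: leave it alone
        for entry in plugin_dir.iterdir():
            if not entry.is_dir():
                continue
            if any(keyword in entry.name for keyword in keep_keywords):
                continue  # e.g. "mysql" keeps both mysqlreader and mysqlwriter
            removed.append(entry.name)
            if not dry_run:
                shutil.rmtree(entry)
    return sorted(removed)


if __name__ == "__main__":
    # Directory taken from the paths used in this post; adjust for your machine.
    home = r"F:\WorkAbout\work\DataX\target\datax\datax"
    print(prune_plugins(home, ["mysql", "rdbms"], dry_run=True))
```

Running it once in dry-run mode and reading the list before passing `dry_run=False` avoids deleting a plugin you still need.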

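I have Python 3.6.5 installed, and the bundled datax.py is written for Python 2, so running the command from the official documentation as-is fails with an error: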

```
F:\WorkAbout\work\DataX\target\datax\datax\bin>python datax.py -r mysqlreader -w mysqlwriter
```

So we need to convert it. Python 3 ships with the 2to3 tool, located under Tools\scripts in the installation directory. Let's see what it can do:

```
D:\Programs\Python\Python36-32\Tools\scripts>2to3.py --output-dir=D:/datax -W -n F:\WorkAbout\work\DataX\target\datax\datax\bin\datax.py
```

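Here `--output-dir` writes the converted file to D:/datax instead of overwriting the original, `-n` disables backup files, and `-W` writes the output file even if nothing needed changing. I renamed the converted file to datax3.py, copied it into the bin directory, and reran the command: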

```
F:\WorkAbout\work\DataX\target\datax\datax\bin>python datax3.py -r mysqlreader -w mysqlwriter
```

We can save the command's output to a file:

```
python datax3.py -r mysqlreader -w mysqlwriter > mysql2gbase.json
```

This generates mysql2gbase.json in the current directory.
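Depending on the DataX version, the redirected output can contain banner or usage text before the JSON template (the run output at the end of this post starts with such a banner line), which makes the file invalid JSON until it is trimmed. A hedged sketch for stripping it, assuming the template is the first JSON object in the file with a top-level `job` key; `extract_job_json` and `clean_template_file` are hypothetical names.

```python
import json


def extract_job_json(text):
    """Return the first JSON object in text that has a top-level "job" key.

    Tries to decode starting at each "{" in turn, so banner lines or
    placeholders such as "{DATAX_HOME}" before the template are skipped.
    """
    decoder = json.JSONDecoder()
    pos = text.find("{")
    while pos != -1:
        try:
            obj, _end = decoder.raw_decode(text, pos)
        except json.JSONDecodeError:
            obj = None  # not valid JSON at this brace; try the next one
        if isinstance(obj, dict) and "job" in obj:
            return obj
        pos = text.find("{", pos + 1)
    raise ValueError("no DataX job object found")


def clean_template_file(path, encoding="utf-8"):
    """Rewrite path in place so it holds only the pretty-printed job JSON."""
    with open(path, encoding=encoding) as f:
        job = extract_job_json(f.read())
    with open(path, "w", encoding=encoding) as f:
        json.dump(job, f, indent=4, ensure_ascii=False)
    return job
```

On Windows, a file redirected from cmd.exe may not be UTF-8; pass the matching `encoding` if reading fails.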

Then edit it with the settings for your environment:

```
{
...
```
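Since the actual configuration is not shown, here is a minimal sketch of what a mysqlreader to mysqlwriter job typically contains, built as a Python dict. The overall shape (`job.content[].reader/writer`, `job.setting.speed.channel`) follows the DataX job template; the helper name `build_mysql_job`, its input keys, and every connection value below are hypothetical placeholders, so compare the result with the template your own build generated before using it.

```python
import json


def build_mysql_job(src, dst, columns, channel=1, write_mode="insert"):
    """Build a minimal DataX job dict: mysqlreader -> mysqlwriter.

    src and dst are dicts with the hypothetical keys "jdbc_url", "user",
    "password" and "table". Parameter names inside the returned dict follow
    the mysqlreader/mysqlwriter templates.
    """
    reader = {
        "name": "mysqlreader",
        "parameter": {
            "username": src["user"],
            "password": src["password"],
            "column": list(columns),
            # reader side: jdbcUrl is a list
            "connection": [{"table": [src["table"]], "jdbcUrl": [src["jdbc_url"]]}],
        },
    }
    writer = {
        "name": "mysqlwriter",
        "parameter": {
            "writeMode": write_mode,
            "username": dst["user"],
            "password": dst["password"],
            "column": list(columns),
            # writer side: jdbcUrl is a single string
            "connection": [{"table": [dst["table"]], "jdbcUrl": dst["jdbc_url"]}],
        },
    }
    return {
        "job": {
            "content": [{"reader": reader, "writer": writer}],
            "setting": {"speed": {"channel": channel}},  # number of concurrent channels
        }
    }


if __name__ == "__main__":
    src = {"jdbc_url": "jdbc:mysql://127.0.0.1:3306/src_db", "user": "root",
           "password": "***", "table": "t_metric"}
    dst = dict(src, jdbc_url="jdbc:mysql://127.0.0.1:3306/dst_db")
    print(json.dumps(build_mysql_job(src, dst, ["id", "name"]), indent=4))
```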

Then run the sync job with `python datax3.py mysql2gbase.json`.

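For scheduled runs on a server, the launch can be wrapped in a script that checks the exit code. A minimal sketch, assuming Python 3 (so it calls the converted datax3.py) and using datax.py's `-p "-Dkey=value"` option for job parameters that the JSON references as `${key}`; confirm that option with `python datax3.py --help` on your build. The function names are hypothetical.

```python
import subprocess
import sys
from pathlib import Path


def build_datax_command(datax_bin, job_json, params=None):
    """Build the argv for one DataX run using the 2to3-converted datax3.py.

    params is an optional dict rendered as a single -p "-Dk1=v1 -Dk2=v2"
    argument (assumed datax.py option; verify with --help).
    """
    cmd = [sys.executable, str(Path(datax_bin) / "datax3.py")]
    if params:
        cmd += ["-p", " ".join("-D%s=%s" % (k, v) for k, v in params.items())]
    cmd.append(str(job_json))
    return cmd


def run_datax(datax_bin, job_json, params=None):
    """Run one DataX job and return its exit code (0 means success)."""
    return subprocess.run(build_datax_command(datax_bin, job_json, params)).returncode
```

A scheduler (cron, Windows Task Scheduler) can then call `run_datax` and alert on a non-zero return code.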

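That was only a simple example; now for the real work. First we need a GbaseWriter. Since it involves little special handling, I simply followed the DataX plugin development guide to write one, packaged the plugin with `mvn -U clean package assembly:assembly -pl gbasewriter -am -Dmaven.test.skip=true`, then ran `python datax.py -r mysqlreader -w gbasewriter` and filled in the generated template for the actual environment. The run looks like this: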
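The packaging command above uses Maven's `-pl` (build only the listed modules, comma-separated) and `-am` (also build the modules they depend on), which avoids rebuilding every plugin. A small hypothetical helper that assembles the same command for one or more plugin modules; the module names you pass must match `<module>` entries in the DataX root pom.

```python
import shutil
import subprocess


def build_plugin_package_command(modules, skip_tests=True):
    """Return the Maven argv that packages only the given DataX modules.

    -pl restricts the reactor to the listed modules; -am also builds the
    modules they depend on (such as datax-common).
    """
    mvn = shutil.which("mvn") or "mvn"  # on Windows this can resolve to mvn.cmd
    cmd = [mvn, "-U", "clean", "package", "assembly:assembly",
           "-pl", ",".join(modules), "-am"]
    if skip_tests:
        cmd.append("-Dmaven.test.skip=true")
    return cmd


def package_plugins(modules, project_dir):
    """Run the packaging command from the DataX source root; raises on failure."""
    subprocess.run(build_plugin_package_command(modules), check=True, cwd=project_dir)


if __name__ == "__main__":
    print(" ".join(build_plugin_package_command(["gbasewriter"])))
```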

```
DataX (DATAX-OPENSOURCE-3.0), From Alibaba !
```

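My machine is rather underpowered, so this does not reflect DataX's real performance. DataX is now ready to be deployed to the server to sync the data.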